Kubeflow

- Kubeflow Charmers | bundle

- Cloud

| Channel | Revision | Published |
|---|---|---|
| latest/candidate | 294 | 24 Jan 2022 |
| latest/beta | 430 | 30 Aug 2024 |
| latest/edge | 423 | 26 Jul 2024 |
| 1.9/stable | 432 | 03 Dec 2024 |
| 1.9/beta | 420 | 19 Jul 2024 |
| 1.9/edge | 431 | 03 Dec 2024 |
| 1.10/beta | 433 | 24 Mar 2025 |
| 1.8/stable | 414 | 22 Nov 2023 |
| 1.8/beta | 411 | 22 Nov 2023 |
| 1.8/edge | 413 | 22 Nov 2023 |
| 1.7/stable | 409 | 27 Oct 2023 |
| 1.7/beta | 408 | 27 Oct 2023 |
| 1.7/edge | 407 | 27 Oct 2023 |

juju deploy kubeflow --channel 1.9/stable

Deploy Kubernetes operators easily with Juju, the Universal Operator Lifecycle Manager. Need a Kubernetes cluster? Install MicroK8s to create a full CNCF-certified Kubernetes system in under 60 seconds.

Platform:

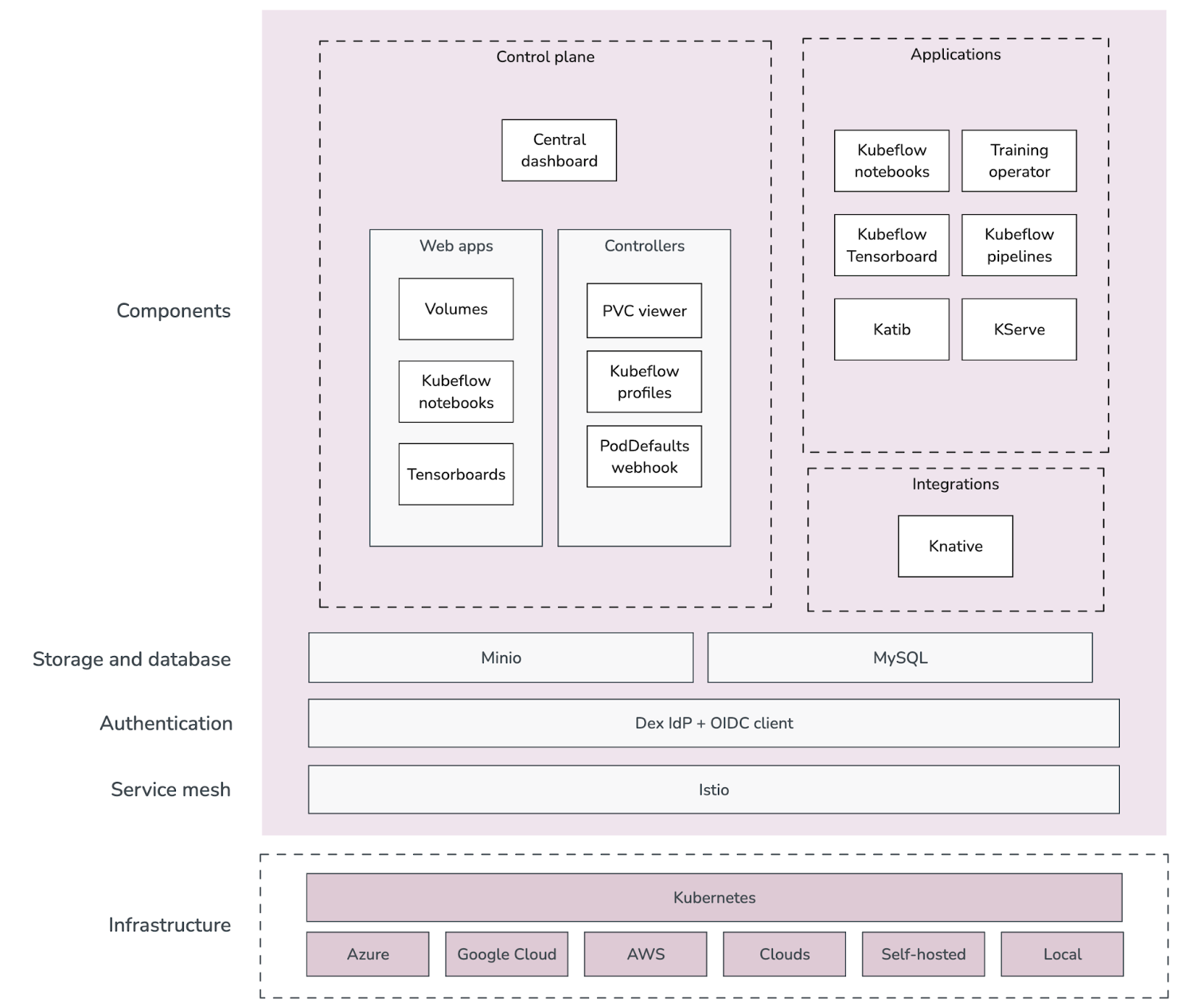

This guide describes the Charmed Kubeflow (CKF) system architecture, its components, and its integration with other products and tools.

Minimum system requirements

CKF runs on any Cloud Native Computing Foundation (CNCF)-compliant Kubernetes (K8s) with these minimum requirements for the underlying infrastructure:

| Resource | Minimum |
|---|---|
| Memory | 16 GB |
| Storage | 50 GB |
| CPU | 4 cores |
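
As a rough illustration, the minimums in the table can be turned into a preflight check. The sketch below is not part of CKF: the helper name `meets_ckf_minimums` and its parameters are hypothetical, and the caller is assumed to supply the measured values for the host or cluster.

```python
# Minimum requirements taken from the table above.
CKF_MINIMUMS = {"memory_gb": 16, "storage_gb": 50, "cpu_cores": 4}


def meets_ckf_minimums(memory_gb, storage_gb, cpu_cores):
    """Return the names of the resources that fall short of the minimums.

    An empty list means every minimum is satisfied.
    Hypothetical helper: CKF does not ship this function.
    """
    measured = {
        "memory_gb": memory_gb,
        "storage_gb": storage_gb,
        "cpu_cores": cpu_cores,
    }
    return [
        name
        for name, minimum in CKF_MINIMUMS.items()
        if measured[name] < minimum
    ]


if __name__ == "__main__":
    # A host with 32 GB of memory, 40 GB of storage, and 8 cores.
    print(meets_ckf_minimums(memory_gb=32, storage_gb=40, cpu_cores=8))
```

Returning the list of shortfalls, rather than a single boolean, makes it clear which resource needs to be increased.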

CKF also works in GPU-accelerated environments. There is no minimum number of GPUs, as the number needed depends on the use case.

System architecture overview

Charmed Kubeflow is a cloud-native application that integrates several components at different layers:

- At the infrastructure level, it can be deployed on any certified Kubernetes, from local to public clouds.

- The service mesh component is Istio. It is used for traffic management and access control.

- The authentication layer is built upon Dex IdP and an OIDC client, usually OIDC Authservice or oauth2 proxy.

- The storage and database layer comprises MySQL and MinIO, used as the main storage and S3 storage solutions respectively. They are commonly used for storing logs, artifacts, and workload definitions.

The main components of CKF can be split into:

- Control plane: responsible for the core operations of Charmed Kubeflow, such as its User Interface (UI), user and authorization management, and volume management. The control plane is comprised of:

  - Web applications: web UI components that provide a point of interaction between the user and their ML workloads.

  - Central dashboard: displays the web applications.

  - Controllers: business logic to manage different operations, such as profile or volume management.

- Applications: enable and manage different user workloads, such as training, experimentation with notebooks, ML pipelines, and model serving.

- Integrations: Charmed Kubeflow is integrated with components that may not always be enabled in upstream Kubeflow, like Knative for serverless support.

Components

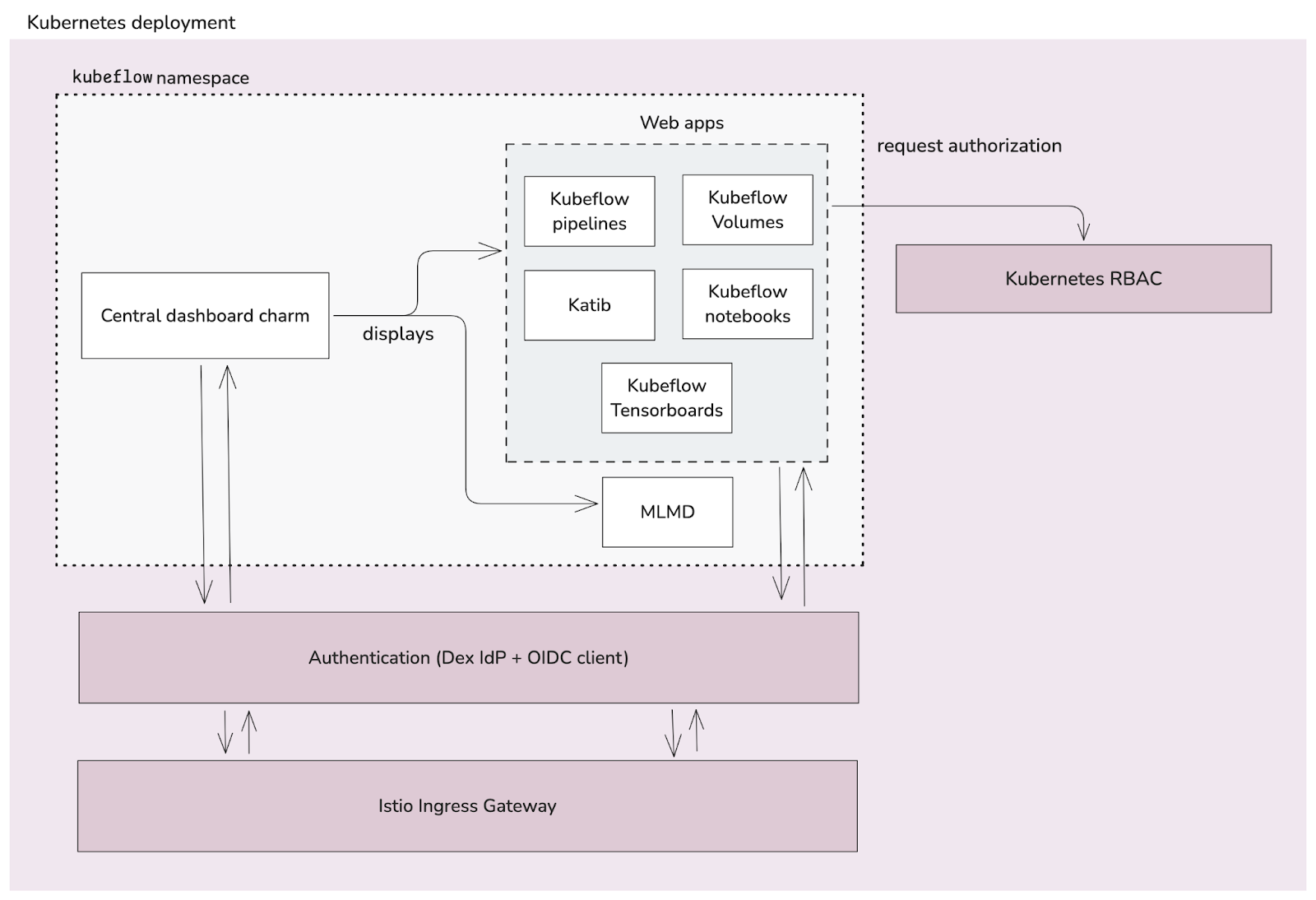

Central dashboard and web apps

The central dashboard provides an authenticated web interface for Charmed Kubeflow components. It acts as a hub for the components and user workloads running in the cluster. The main features of the central dashboard include:

- Authentication and authorization based on Kubeflow profiles.

- Access to user interfaces of Kubeflow components, such as Notebook servers or Katib experiments.

- Ability to customize the links for accessing external applications.

The following diagram shows the dashboard's overall operation and how it interacts with the web and authentication applications:

From the diagram above:

- The central dashboard is the landing page that displays the web applications. It is integrated with Istio, Dex and the OIDC client to provide an authenticated web interface.

- The web applications give access to the various components of CKF. They are also integrated with Istio, Dex and the OIDC client to provide authentication.

- The web applications also play an important role in how users interact with the actual resources deployed in the Kubernetes cluster, as they are the ones executing actions, such as `create`, `delete`, and `list`, based on Kubernetes RBAC.

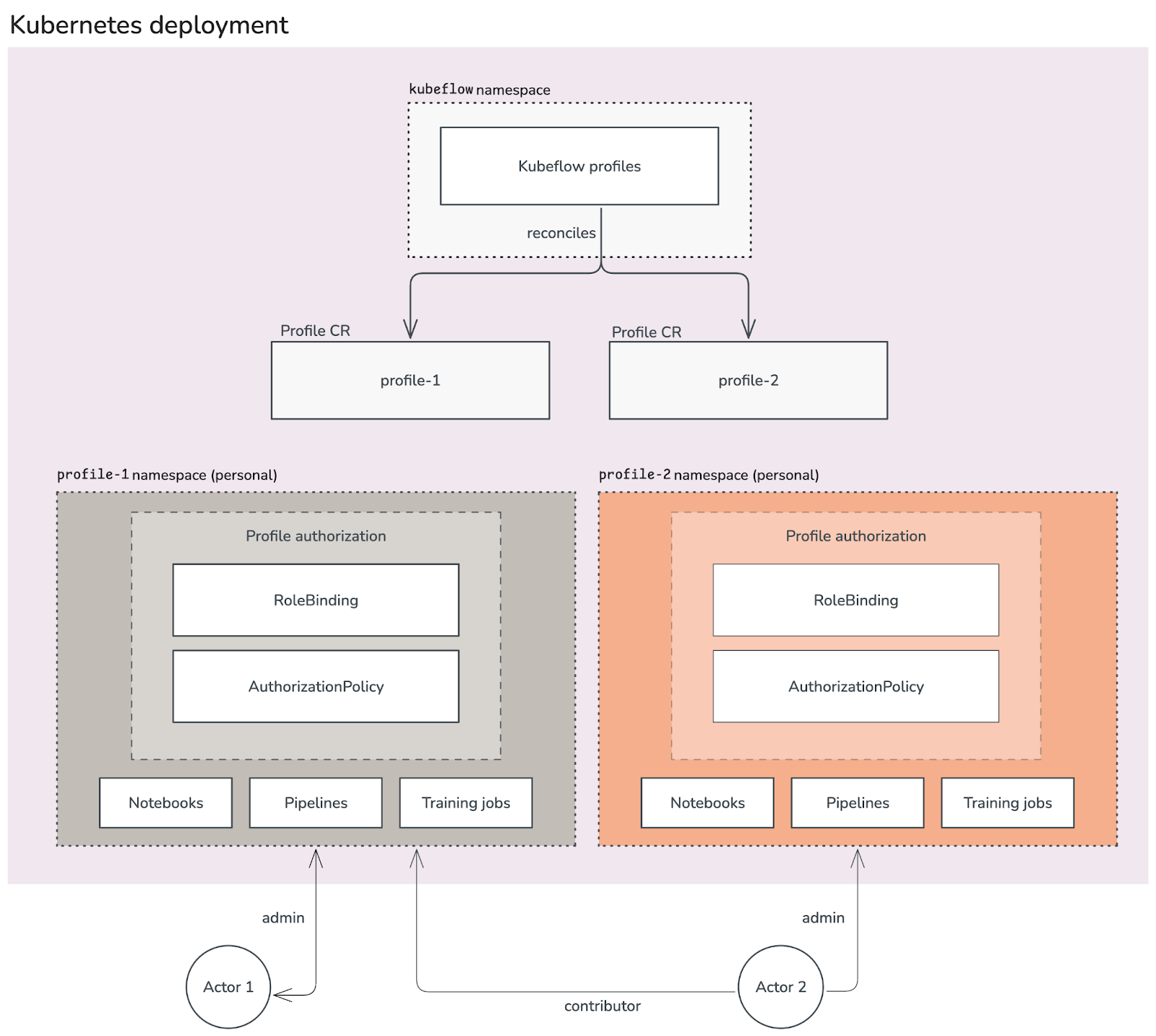

Profiles

User isolation in CKF is mainly handled by the Kubeflow profiles component. In the Kubeflow context, a profile is a Kubernetes Custom Resource Definition (CRD) that wraps a Kubernetes namespace to add owners and contributors.

A profile can be created by the deployment administrator via the central dashboard or by applying a Profile Custom Resource. The deployment administrator can define the owner, contributors, and resource quotas.
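
For illustration, a Profile Custom Resource can be assembled as a plain dictionary and serialized to JSON. This is a minimal sketch rather than a definitive manifest: the helper `build_profile`, the profile name, the owner email, and the quota values are all hypothetical. It assumes the `kubeflow.org/v1` Profile schema with `owner` and `resourceQuotaSpec` fields, and leaves contributors out.

```python
import json


def build_profile(name, owner_email, cpu_limit, memory_limit):
    """Build a Profile Custom Resource as a plain dict.

    Every value is supplied by the caller; nothing here is a CKF default.
    """
    return {
        "apiVersion": "kubeflow.org/v1",
        "kind": "Profile",
        # The profile name is also the name of the namespace it wraps.
        "metadata": {"name": name},
        "spec": {
            "owner": {"kind": "User", "name": owner_email},
            # Standard Kubernetes ResourceQuota spec for the namespace.
            "resourceQuotaSpec": {
                "hard": {"cpu": cpu_limit, "memory": memory_limit}
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical profile for a user called alice.
    profile = build_profile("team-a", "alice@example.com", "8", "32Gi")
    print(json.dumps(profile, indent=2))
```

Because `kubectl` accepts JSON manifests as well as YAML, the printed output could be saved to a file and applied with `kubectl apply -f`.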

From the diagram above:

- Kubeflow profiles is the component responsible for reconciling the Profile Custom Resources (CRs) that should exist in the Kubernetes deployment.

- Each profile has a one-to-one mapping to a namespace, which contains:

  - User (admin and contributors) workloads, such as notebooks, pipelines and training jobs.

  - `RoleBindings` so users can access resources in their namespaces.

  - `AuthorizationPolicies` for access control.

- Different actors can access different profiles depending on their role:

  - Admins can access their own namespaces and the resources deployed in them. They can also modify contributors.

  - Contributors have access to the namespaces they have been granted access to, but cannot modify the contributors.

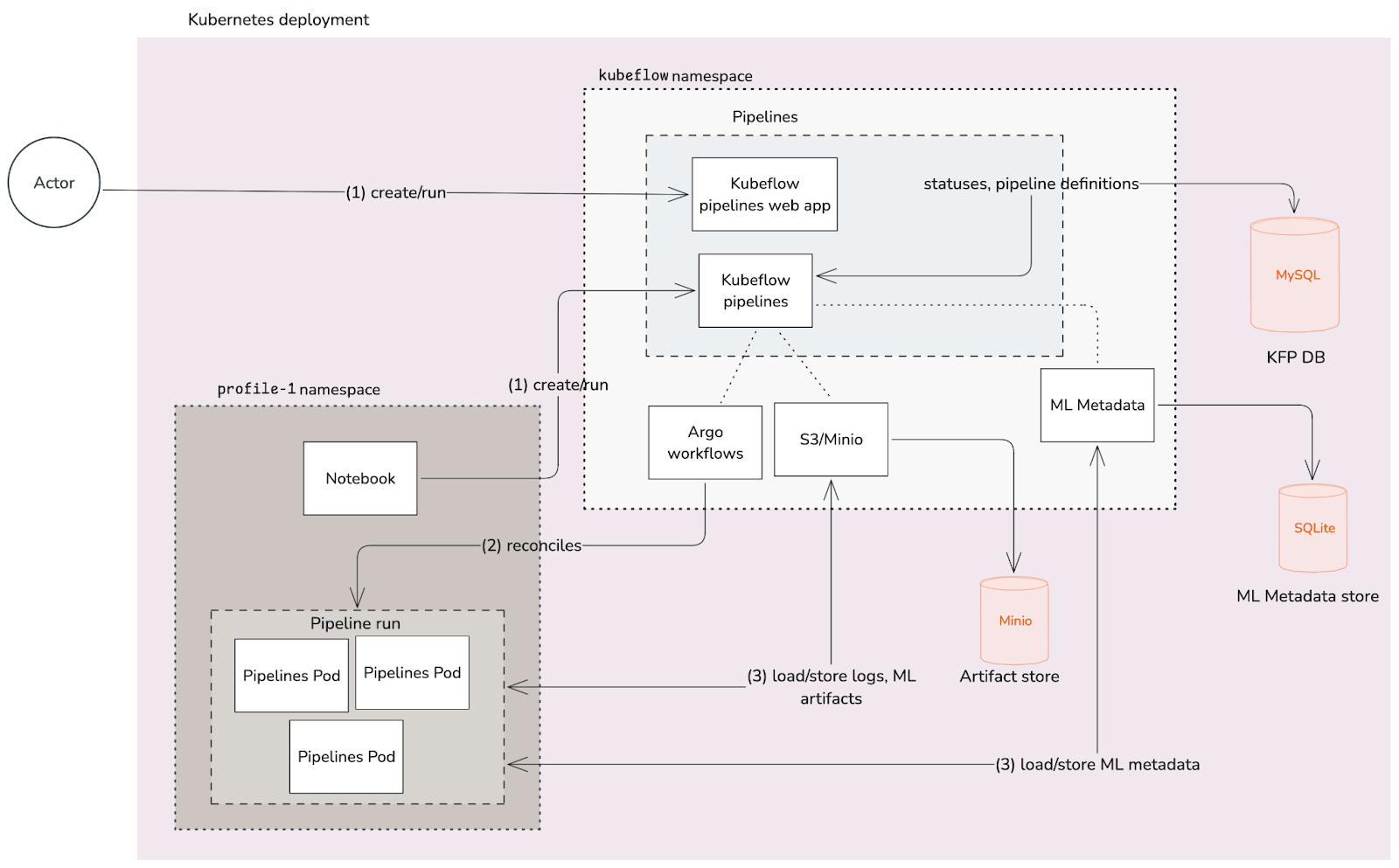

Pipelines

The pipelines component enables the development and deployment of portable and scalable ML workloads.

From the diagram above:

- The pipelines web app is the user interface for managing and tracking experiments, jobs, and runs.

- The pipelines component covers workflow scheduling, visualization, multi-user management, and the API server that manages and reconciles the operations.

- Pipelines use Argo for workflow orchestration.

- Pipelines rely on different storage and database solutions for different purposes:

  - ML metadata store: used for storing ML metadata. The application that handles it is called `ml-metadata`.

  - Artifact store: used for storing logs and ML artifacts resulting from each pipeline run step. The application used for this is MinIO.

  - Kubeflow pipelines database: used for storing statuses and pipeline definitions. It is usually a MySQL database.
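
The split between the three stores can be summarized as a small lookup. The sketch below is a toy model of the description above, not code from Kubeflow Pipelines: the key names and the helper `group_by_store` are hypothetical, and MySQL is assumed as the pipelines database.

```python
# Which backing store holds each kind of pipeline data, per the list above.
STORE_FOR = {
    "ml_metadata": "ml-metadata",
    "logs": "MinIO",
    "artifacts": "MinIO",
    "run_status": "MySQL",
    "pipeline_definition": "MySQL",
}


def group_by_store(output_kinds):
    """Group output kinds by the store that would receive them."""
    grouped = {}
    for kind in output_kinds:
        grouped.setdefault(STORE_FOR[kind], []).append(kind)
    return grouped


if __name__ == "__main__":
    # Outputs a single pipeline step might produce.
    step_outputs = ["logs", "ml_metadata", "artifacts", "run_status"]
    for store, kinds in group_by_store(step_outputs).items():
        print(store, kinds)
```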

Pipeline runs lifecycle

- A request from the user is received, either via the web app or from a notebook, to create a new pipeline run.

- The Argo controller reconciles the Argo workflows in the pipeline definition, creating the necessary Pods for running the various steps of the pipeline.

- During the pipeline run, each step may generate logs, ML metadata, and ML artifacts, which are stored in the various storage solutions integrated with pipelines.

While the run is executing and after completion, users can see the result of the run, and access the logs and artifacts generated by the pipeline.

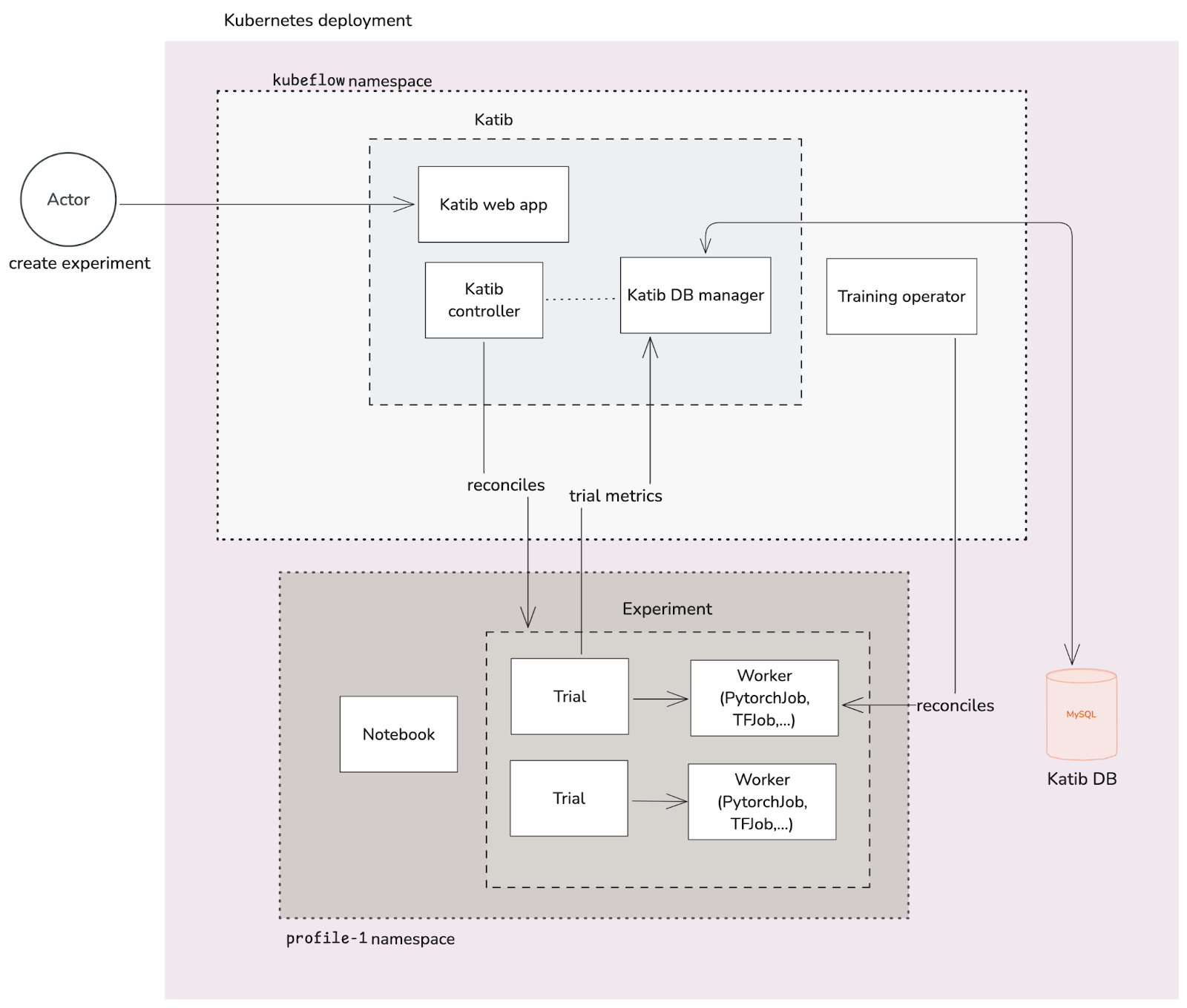

AutoML

Automated Machine Learning (AutoML) allows users with minimal knowledge of ML to create ML projects leveraging different tools and methods.

In CKF, AutoML is achieved using Katib for hyperparameter tuning, early stopping, and neural architecture search. The Training operator is used for executing model training jobs.

From the diagram above:

- The Katib controller is responsible for reconciling experiment CRs.

- Each experiment comprises:

  - Trials: an iteration of the experiment, e.g., hyperparameter tuning.

  - Workers: the actual jobs that train the model, for which the Training operator is responsible.

- The Katib web app is the main landing page for users to access and manage experiments.

- The Katib DB manager is responsible for storing and loading the trial metrics.
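
The relationship between an experiment, its trials, and the workers can be sketched with a toy random search. This does not use the Katib API: `run_experiment` and `toy_objective` are hypothetical names, the objective function stands in for a training job run by a worker, and random search is only one of several possible search algorithms.

```python
import random


def run_experiment(objective, low, high, max_trials, seed=0):
    """Run a toy random-search experiment over one hyperparameter.

    Each loop iteration plays the role of a trial: it samples a value,
    evaluates the objective (standing in for a training worker), and
    records the resulting metric. Returns (best_trial, all_trials).
    """
    rng = random.Random(seed)
    trials = []
    for index in range(max_trials):
        learning_rate = rng.uniform(low, high)
        metric = objective(learning_rate)
        trials.append(
            {"trial": index, "learning_rate": learning_rate, "metric": metric}
        )
    best = max(trials, key=lambda trial: trial["metric"])
    return best, trials


def toy_objective(learning_rate):
    """Stand-in for model training: the metric peaks at 0.03."""
    return 1.0 - abs(learning_rate - 0.03)


if __name__ == "__main__":
    best, trials = run_experiment(toy_objective, 0.01, 0.05, max_trials=12)
    print(len(trials), "trials; best learning rate:", best["learning_rate"])
```

In this analogy, the list of recorded metrics corresponds to what the Katib DB manager stores and loads for each trial.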

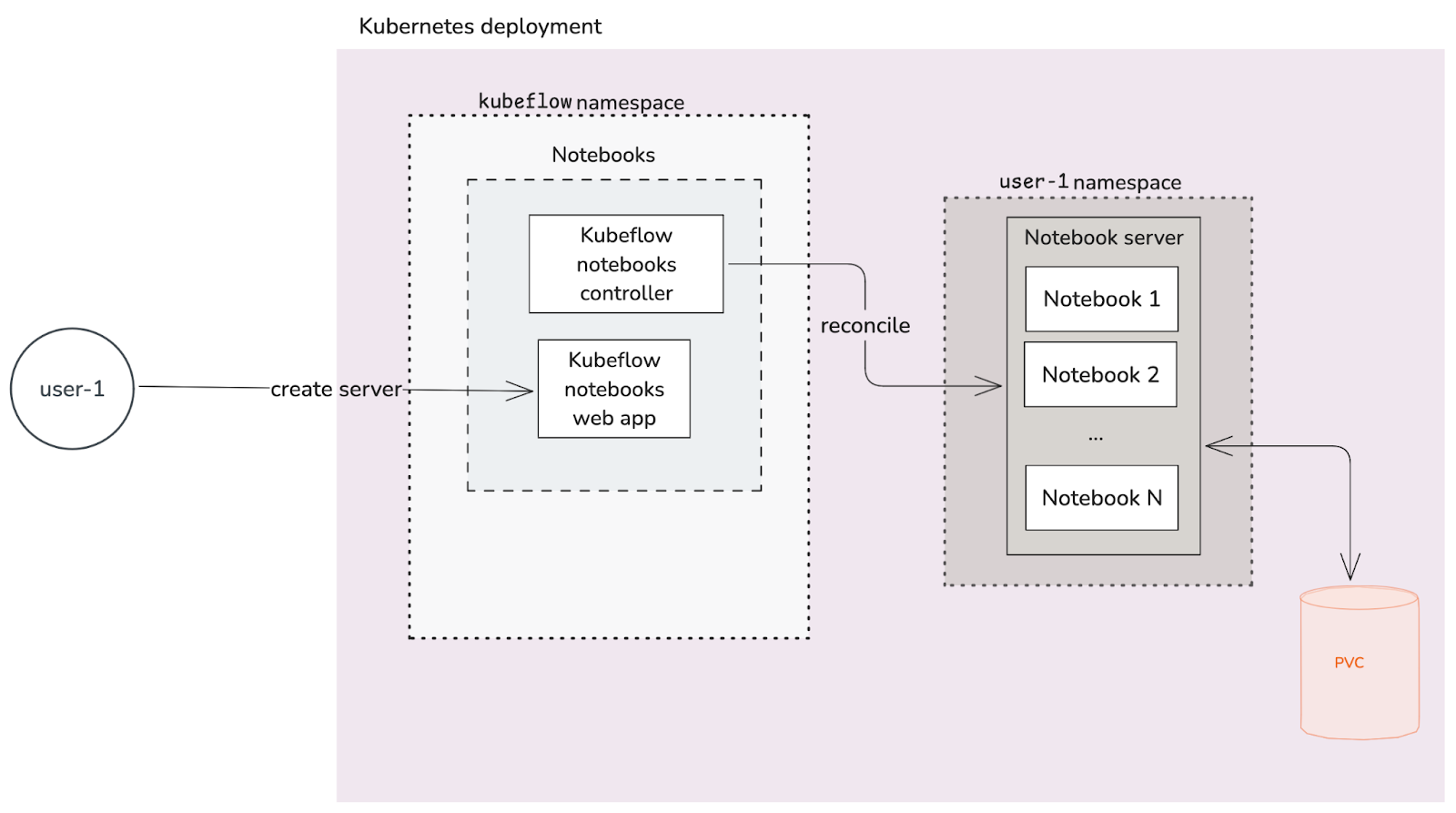

Notebooks

Kubeflow notebooks enable users to run web-based development environments. They provide support for JupyterLab, RStudio, and Visual Studio Code.

With Kubeflow notebooks, users can create development environments directly in the Kubernetes cluster rather than locally, and share them with multiple users, if allowed.

From the diagram above:

- The notebooks controller is responsible for reconciling the Notebook servers that must exist.

  - Disambiguation: a notebook server is the backend that provides the core functionality for running and interacting with the development environments, that is, the notebooks. For example, a Jupyter notebook server can hold multiple .ipynb notebooks.

- The notebooks web app is the landing page for users to manage and interact with the notebook servers.

- Each notebook server has a PersistentVolumeClaim (PVC) where the notebook data is stored.

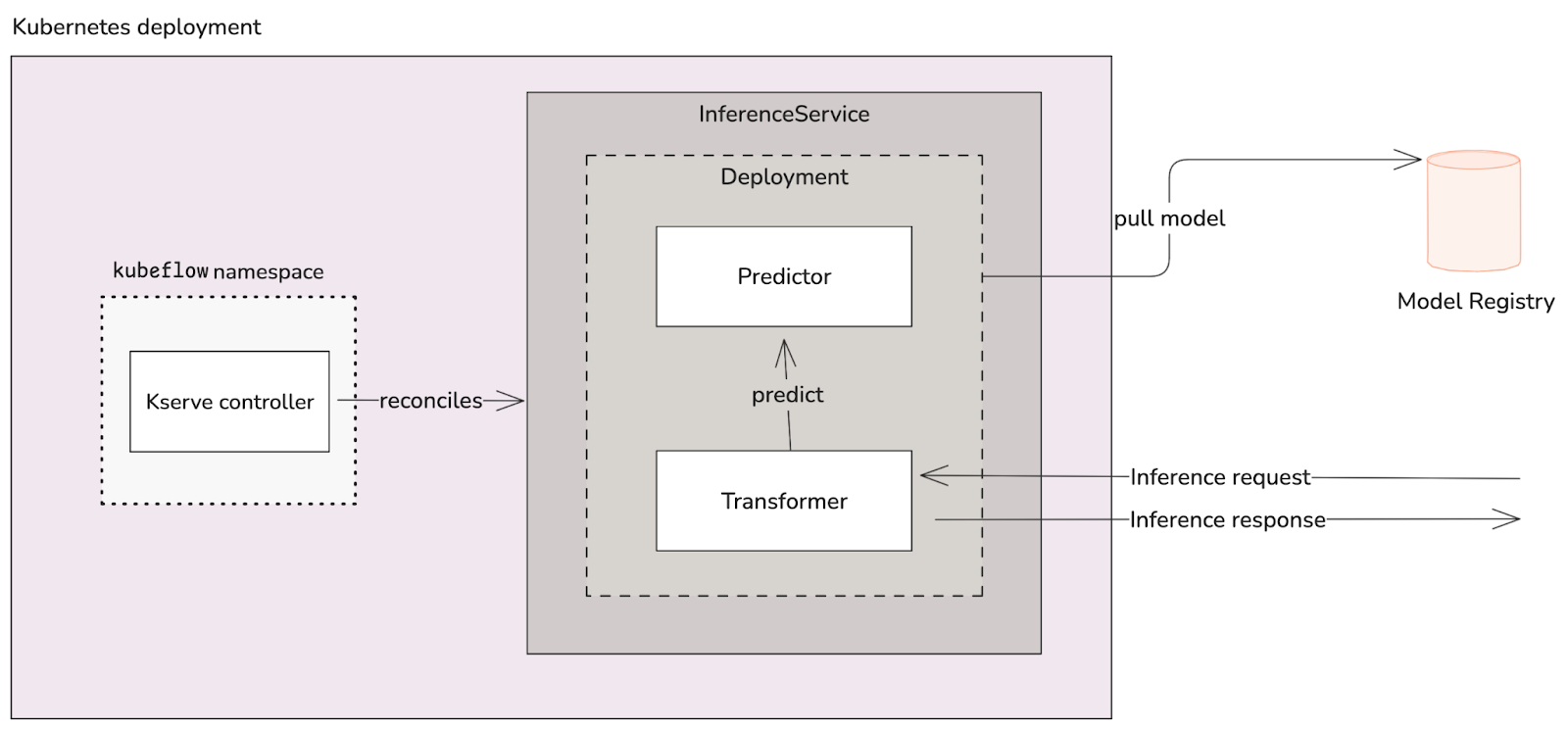

KServe

Model server

A model server enables ML engineers to host models and make them accessible over a network. In Charmed Kubeflow, this is done using KServe.

From the diagram above:

- The KServe controller reconciles the InferenceService (ISVC) CR.

- The ISVC is responsible for creating a Kubernetes Deployment with two Pods:

  - Transformer: responsible for converting inference requests into data structures that the model can understand. It also transforms the predictions returned by the model back into labelled predictions.

  - Predictor: responsible for pulling pre-trained models from a model registry, loading them, and returning predictions based on the inference requests.
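
To make the Transformer and Predictor split concrete, an InferenceService CR can be sketched as a dictionary. This is a hedged example, not a manifest taken from CKF: it assumes the `serving.kserve.io/v1beta1` schema, and the helper `build_inference_service`, the resource name, the namespace, the storage URI, the container image, and the `sklearn` model format are all placeholders.

```python
import json


def build_inference_service(name, namespace, storage_uri, transformer_image):
    """Build an InferenceService CR with a transformer and a predictor."""
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Transformer: pre- and post-processes requests and responses.
            "transformer": {
                "containers": [
                    {"name": "kserve-container", "image": transformer_image}
                ]
            },
            # Predictor: pulls the model from storage and serves it.
            "predictor": {
                "model": {
                    "modelFormat": {"name": "sklearn"},
                    "storageUri": storage_uri,
                }
            },
        },
    }


if __name__ == "__main__":
    # All values below are hypothetical placeholders.
    isvc = build_inference_service(
        name="iris-classifier",
        namespace="team-a",
        storage_uri="s3://example-bucket/models/iris",
        transformer_image="registry.example.com/iris-transformer:latest",
    )
    print(json.dumps(isvc, indent=2))
```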

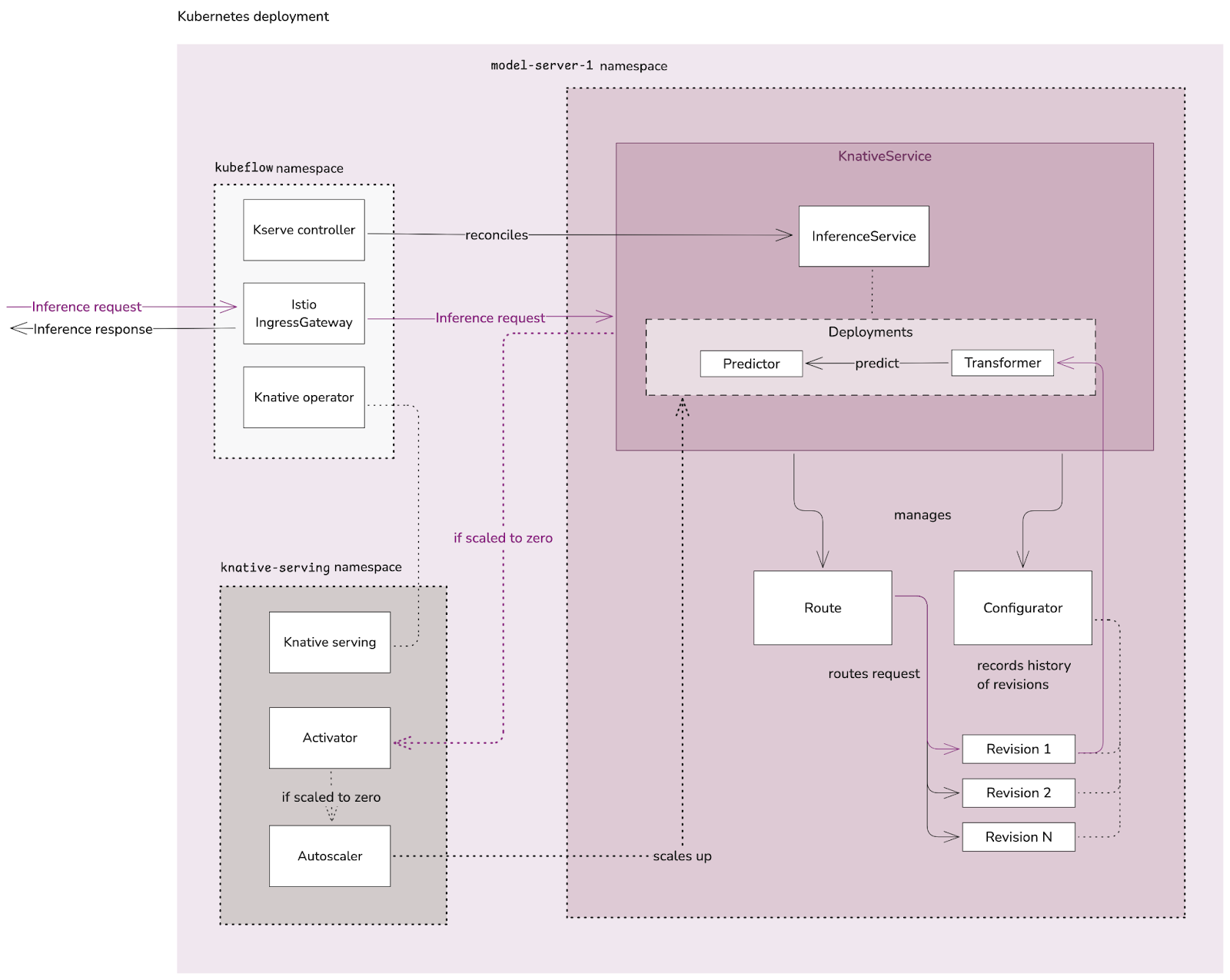

Serverless model service

When configured in “serverless mode”, KServe leverages the serverless capabilities of Knative. In this mode, components like Istio are leveraged for traffic management.

From the diagram above:

- The Istio IngressGateway receives an inference request from the user and routes it to the KnativeService (KSVC) that corresponds to the InferenceService, i.e., the model server, provided this resource is exposed outside the cluster.

- The KSVC manages the workload lifecycle, in this case the ISVC. It controls the following:

  - Route: routes the requests to the corresponding revision of the workload.

  - Configurator: records history of the multiple revisions of the workload.

- The Knative serving component is responsible for reconciling the KSVCs in the Kubernetes deployment. It includes the following components:

  - Activator: queues incoming requests and communicates with the Autoscaler to bring scaled-to-zero workloads back up.

  - Autoscaler: scales up and down the workloads.

Inference request flow

- The Istio IngressGateway receives the inference request and directs it to the KSVC.

  - If the ISVC is scaled down to zero, the Activator will request the Autoscaler to scale up the ISVC Pods.

- Once the request reaches the KSVC, the Route ensures that the request is routed to the correct revision of the ISVC.

- The ISVC receives the request at the Transformer Pod for request transformation.

- Inference is performed at the Predictor Pod.

- The response is then routed back to the user.
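
The flow above can be traced with a small simulation. It is a toy model only: `ToyInferenceService` and `handle_request` are hypothetical names, the "model" is a simple threshold, and no Istio, Knative, or KServe APIs are involved.

```python
class ToyInferenceService:
    """Toy stand-in for an ISVC with a Transformer and a Predictor."""

    def __init__(self, labels):
        self.labels = labels
        self.replicas = 0  # starts scaled down to zero

    def transform_request(self, raw):
        # Transformer: convert the raw request into model input.
        return [float(value) for value in raw["instances"]]

    def predict(self, features):
        # Predictor: a trivial "model" that thresholds the feature sum.
        return 1 if sum(features) > 1.0 else 0

    def transform_response(self, prediction):
        # Transformer: map the raw prediction back to a label.
        return {"label": self.labels[prediction]}


def handle_request(isvc, raw, trace):
    """Walk one request through the flow, recording each hop in trace."""
    trace.append("ingress-gateway")
    if isvc.replicas == 0:
        # The Activator asks the Autoscaler to bring the workload back up.
        trace.append("activator->autoscaler")
        isvc.replicas = 1
    trace.append("route")
    features = isvc.transform_request(raw)
    trace.append("transformer")
    prediction = isvc.predict(features)
    trace.append("predictor")
    return isvc.transform_response(prediction)


if __name__ == "__main__":
    isvc = ToyInferenceService(labels=["negative", "positive"])
    trace = []
    print(handle_request(isvc, {"instances": ["0.7", "0.6"]}, trace))
    print(trace)
```

Only the first request passes through the scale-up step. Later requests find a running replica and go straight to the route.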

Integrations

CKF integrates with various solutions of the Juju ecosystem.

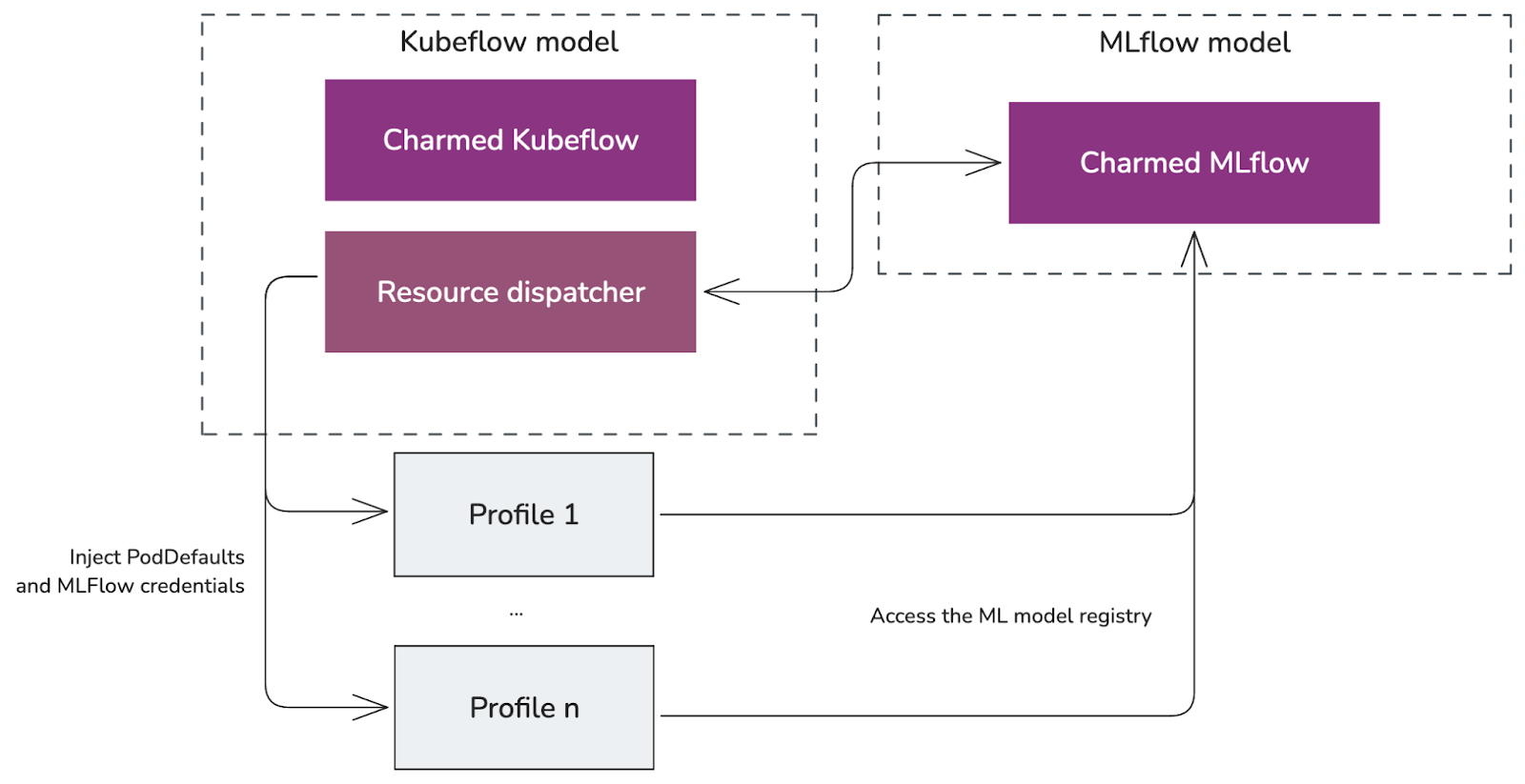

Charmed MLflow

CKF integrates with the Charmed MLflow bundle for experiment tracking and as a model registry.

From the diagram above:

- The resource dispatcher is a component that injects PodDefaults and credentials into each user Profile so that workloads can access the Charmed MLflow model registry.

  - PodDefaults are CRs responsible for ensuring that all Pods in a labelled namespace get mutated as desired.

- Charmed MLflow integrates with the resource dispatcher to send its credentials, server endpoint information and S3 storage information, i.e., the MinIO endpoint.

- With this integration, users can enable access to Charmed MLflow from their notebook servers to perform experiment tracking, or access the model registry.
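
As an illustration of what such a PodDefault might look like, the sketch below builds one as a dictionary. It assumes the `kubeflow.org/v1alpha1` PodDefault schema and the standard MLflow environment variables `MLFLOW_TRACKING_URI` and `MLFLOW_S3_ENDPOINT_URL`. The helper `build_mlflow_poddefault`, the resource name, the `access-mlflow` label, and the endpoint values are hypothetical, and the actual resources created by the resource dispatcher may differ. Credentials are left out.

```python
import json


def build_mlflow_poddefault(namespace, tracking_uri, s3_endpoint):
    """Build a PodDefault that injects MLflow settings into matching Pods."""
    return {
        "apiVersion": "kubeflow.org/v1alpha1",
        "kind": "PodDefault",
        "metadata": {"name": "mlflow-access", "namespace": namespace},
        "spec": {
            "desc": "Allow access to MLflow",
            # Pods carrying this label get the environment variables below.
            "selector": {"matchLabels": {"access-mlflow": "true"}},
            "env": [
                {"name": "MLFLOW_TRACKING_URI", "value": tracking_uri},
                {"name": "MLFLOW_S3_ENDPOINT_URL", "value": s3_endpoint},
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical in-cluster endpoints for the MLflow server and MinIO.
    poddefault = build_mlflow_poddefault(
        namespace="team-a",
        tracking_uri="http://mlflow-server.example.svc:5000",
        s3_endpoint="http://minio.example.svc:9000",
    )
    print(json.dumps(poddefault, indent=2))
```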

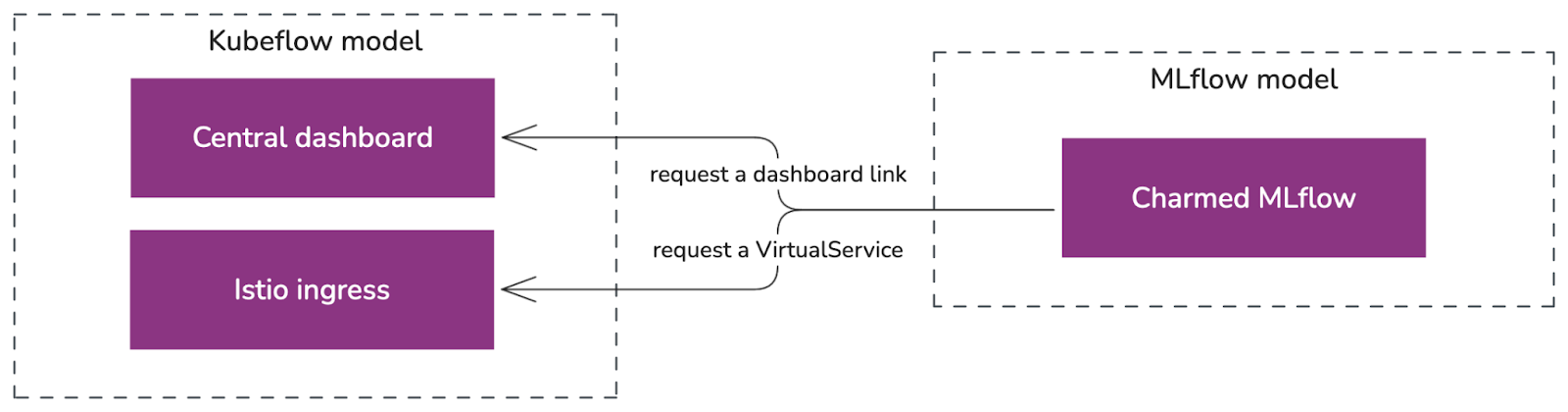

Charmed MLflow is also integrated with the central dashboard and served behind the Charmed Kubeflow ingress:

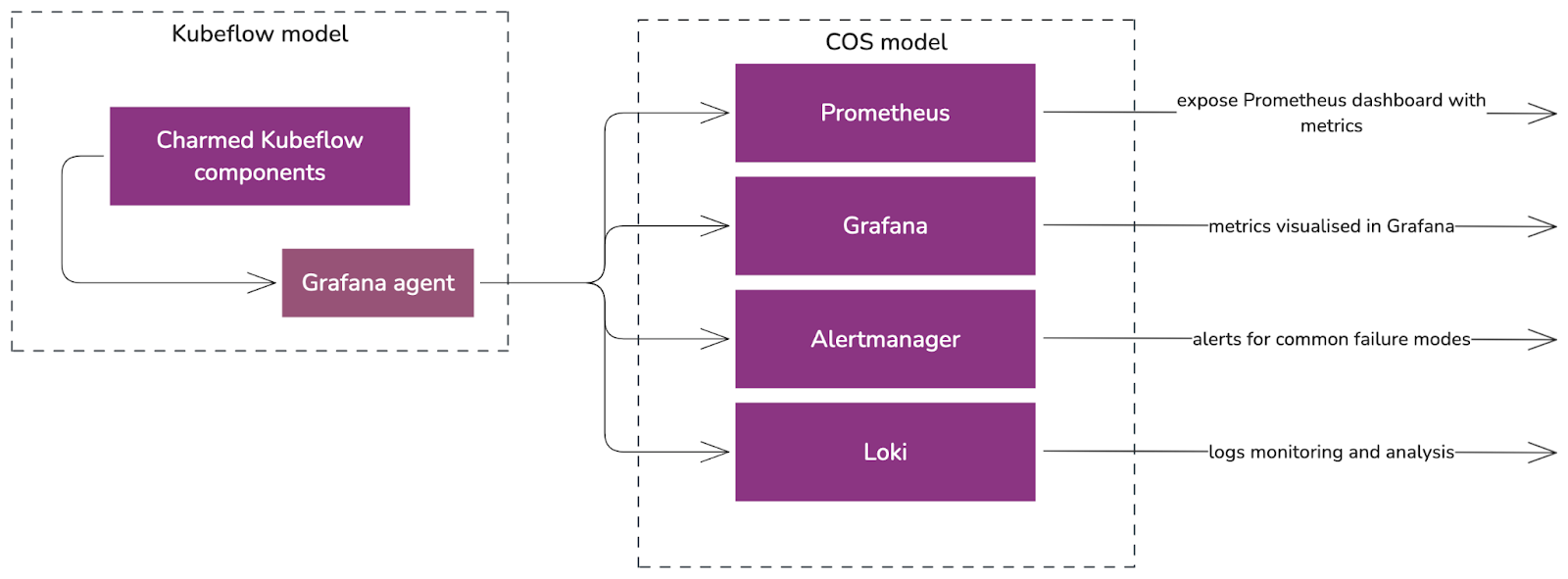

Canonical Observability Stack

To monitor, alert, and visualize failures and metrics, the Charmed Kubeflow components are individually integrated with Canonical Observability Stack (COS).

Due to this integration, each CKF component:

- Enables a metrics endpoint provider for Prometheus to scrape metrics from.

- Has its own Grafana dashboard to visualize relevant metrics.

- Has alert rules that help alert users or administrators when a common failure occurs.

- Integrates with Loki for log reporting.

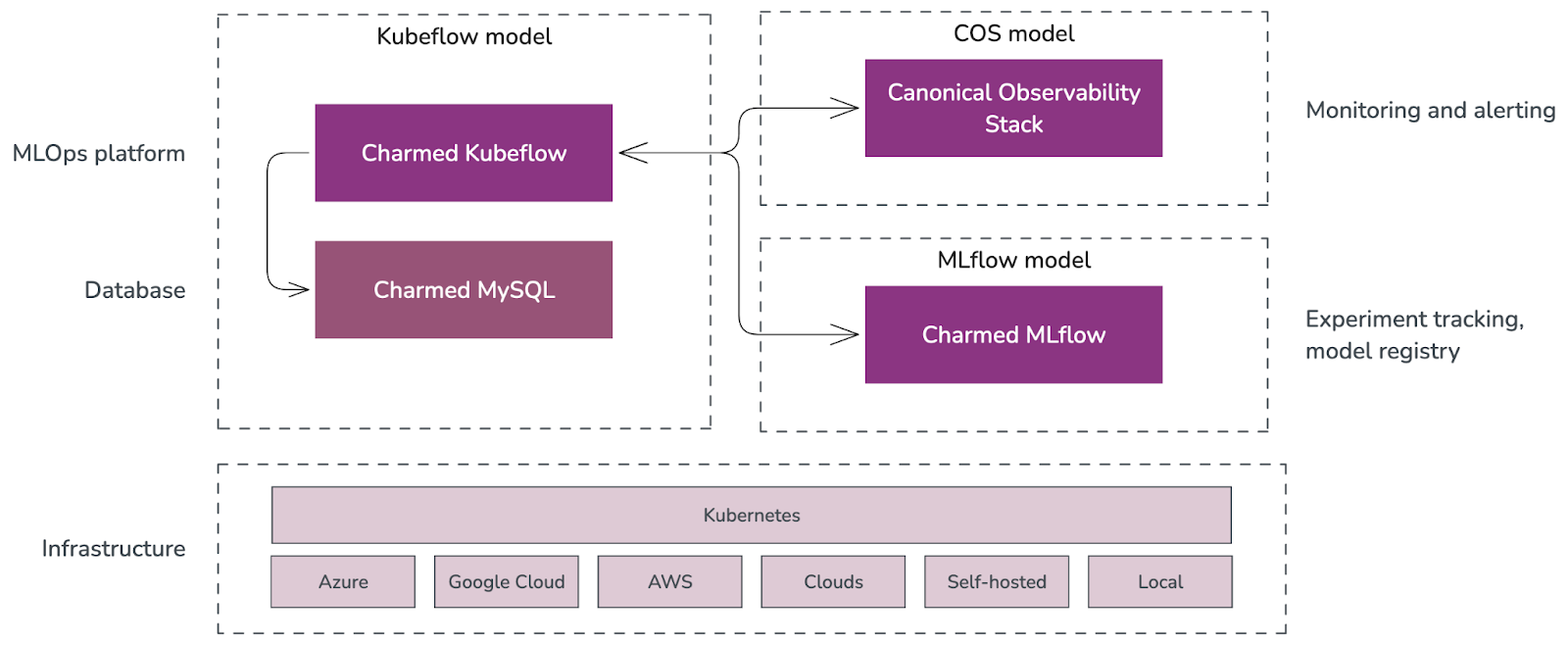

Canonical MLOps portfolio

CKF is the foundation of the Canonical MLOps portfolio, packaged, secured and maintained by Canonical.

This portfolio is an open-source end-to-end solution that enables the development and deployment of Machine Learning (ML) models in a secure and scalable manner. It is a modular architecture that can be adjusted depending on the use case and consists of a growing set of cloud-native applications.

The solution offers up to ten years of software maintenance, break-fix support on selected releases, and managed services.

From the diagram above:

- Each solution is deployed on its own Juju model, which is an abstraction that holds applications and their supporting components, such as databases, and network relations.

- Charmed MySQL provides the database support for Charmed Kubeflow and Charmed MLflow applications. It comes pre-bundled within the CKF and Charmed MLflow bundles.

- COS gathers, processes, visualizes, and alerts based on telemetry signals generated by the components that comprise Charmed Kubeflow.

- CKF provides integration with Charmed MLflow capabilities like experiment tracking and model registry.